TL;DR: Learning to walk from pixels in the real world by using proprioception as supervision.

Abstract

In this work, we show how to learn a visual walking policy that uses only a monocular RGB camera and proprioception. Since simulating RGB is hard, we have to learn vision in the real world. We start with a blind walking policy trained in simulation. This policy can traverse some terrains in the real world but often struggles because it lacks knowledge of the upcoming geometry. Vision can resolve this. We train a visual module in the real world to predict the upcoming terrain with our proposed algorithm, Cross-Modal Supervision (CMS). CMS uses time-shifted proprioception to supervise vision and allows the policy to continually improve with more real-world experience. We evaluate our vision-based walking policy over a diverse set of terrains, including stairs (up to 19 cm high), slippery slopes (inclination of 35 degrees), curbs and tall steps (up to 20 cm), and complex discrete terrains. We achieve this performance with less than 30 minutes of real-world data. Finally, we show that our policy can adapt to shifts in the visual field with a limited amount of real-world experience.
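To make the idea of time-shifted supervision concrete, here is a minimal sketch of how training pairs might be built from a logged walk. It is one plausible reading of the abstract, not the authors' implementation: the function name `make_time_shifted_pairs`, the fixed `shift` in time steps, and the use of a scalar terrain-height estimate as the proprioceptive target are all assumptions made for illustration.

```python
import numpy as np


def make_time_shifted_pairs(frames, proprio_targets, shift):
    """Pair each camera frame with the proprioceptive estimate logged `shift` steps later.

    Hypothetical helper: the camera sees terrain before the legs touch it, so
    what the robot later feels can serve as a label for what it saw earlier.
    """
    frames = np.asarray(frames)
    proprio_targets = np.asarray(proprio_targets)
    if len(frames) != len(proprio_targets):
        raise ValueError("frames and proprio_targets must have the same length")
    if shift < 0 or shift >= len(frames):
        raise ValueError("shift must satisfy 0 <= shift < number of time steps")
    # Frame at time t is matched with the target at time t + shift.
    inputs = frames[: len(frames) - shift]
    labels = proprio_targets[shift:]
    return inputs, labels


# Toy log (assumed data): 6 time steps of 2x2 "images" and a scalar
# terrain-height estimate in meters derived from proprioception.
frames = np.arange(6 * 2 * 2, dtype=np.float32).reshape(6, 2, 2)
heights = np.array([0.0, 0.0, 0.05, 0.10, 0.15, 0.19], dtype=np.float32)

inputs, labels = make_time_shifted_pairs(frames, heights, shift=2)
```

The resulting `(inputs, labels)` pairs could then be fed to any supervised regressor. Because the labels come from the robot's own sensors, new pairs accumulate with every walk and no manual annotation is needed.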

Visual Plasticity: The Prism-Adaptation Experiment

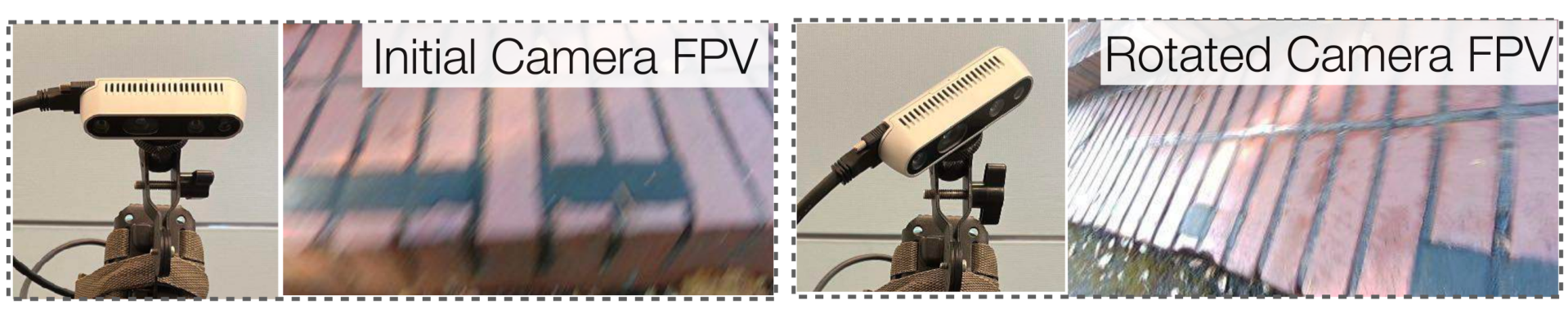

We study how quickly the policy can adapt to shifts in the visual field. To do so, we change the camera orientation. This results in a large change in the field of view, as shown in the accompanying image. Note that after rotation, the robot cannot see the terrain in front of it.

Before shifting the camera's visual field (pre-test), the policy can climb the testing staircase perfectly. However, after rotating the camera, the visual policy stumbles on stairs and drifts in the horizontal direction (exposure). After only three trials (approximately 80 seconds of data), the policy can again anticipate steps and walk without drifting (adaptation).

In the final session of the experiment (post-test), we switch the camera back to its original position. We observe that after training on only two trials, the policy re-adapts to the original visual field.

Generalization Results

We show that our vision-based policy can walk on previously unseen terrains.

Generalization Results: Trip to Stanford

After training our robot on a large set of terrains on the Berkeley campus, we verified that the visual policy generalizes to the Stanford campus.

Blind vs Visual Locomotion

We show that our visual policy outperforms the blind policy in several environments.

BibTeX

@inproceedings{loquercio2023learning,
  title={Learning visual locomotion with cross-modal supervision},
  author={Loquercio, Antonio and Kumar, Ashish and Malik, Jitendra},
  booktitle={IEEE International Conference on Robotics and Automation (ICRA)},
  pages={7295--7302},
  year={2023},
  organization={IEEE}
}

Acknowledgements

This work was supported by the DARPA Machine Common Sense program and by the ONR MURI award N00014-21-1-2801. We would like to thank Sasha Sax for the helpful discussions, Noemi Aepli for support with media material, and Haozhi Qi for support with the website creation.